Maturity Assessment

As organizations increasingly rely on complex digital systems and artificial intelligence (AI) technologies, the need for structured and continuous evaluation of cybersecurity and AI governance practices becomes paramount.

Maturity Assessments

Maturity assessments offer a systematic approach to measuring the effectiveness, consistency, and adaptability of an organization’s cybersecurity and AI risk management capabilities. By evaluating core domains such as governance, risk, asset management, monitoring, and incident response, these assessments provide a comprehensive snapshot of both operational strengths and areas requiring improvement. For cybersecurity, maturity assessments align with frameworks such as the NIST Cybersecurity Framework (CSF) to enhance resilience, support regulatory compliance, and reduce exposure to cyber threats. Similarly, AI maturity assessments grounded in the NIST AI Risk Management Framework (AI RMF) help organizations identify and mitigate risks related to bias, data integrity, model robustness, and ethics. Together, these evaluations support informed decision-making, promote accountability, and lay the foundation for sustainable digital trust and responsible technology adoption.

At Assessed Intelligence, we view cybersecurity and AI evaluations through the lens of process and practice, two critical but distinct dimensions of organizational maturity.

Process refers to the formal, documented policies, procedures, and governance structures that guide how tasks should be performed, such as incident response plans, access control frameworks, or AI model risk management protocols. These processes ensure consistency, accountability, and auditability across the organization.

Practice, on the other hand, reflects how these processes are actually carried out in day-to-day operations. It includes the behavior, skills, and consistency of personnel: whether teams follow secure coding practices, conduct fairness checks on AI models, or enforce access controls effectively.
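Because process and practice are distinct dimensions, a single domain can sit at different levels on each. A minimal sketch in Python, assuming each domain receives one 1-5 rating per dimension; the `DomainRating` class, the `divergent_domains` helper, and the gap threshold of 2 are hypothetical illustrations, not part of the Assessed Intelligence assessment:

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DomainRating:
    """Hypothetical record: one domain rated separately on process and practice (1-5)."""
    domain: str
    process: int   # maturity of documented policies and procedures
    practice: int  # maturity of day-to-day execution

    def gap(self) -> int:
        """Positive when documentation outpaces execution; negative when execution leads."""
        return self.process - self.practice


def divergent_domains(ratings: list[DomainRating], threshold: int = 2) -> list[str]:
    """Return domains whose process and practice levels differ by at least `threshold`."""
    return [r.domain for r in ratings if abs(r.gap()) >= threshold]


ratings = [
    DomainRating("governance", process=4, practice=2),
    DomainRating("incident response", process=3, practice=3),
    DomainRating("monitoring", process=2, practice=4),
]
print(divergent_domains(ratings))  # → ['governance', 'monitoring']
```

A large positive gap points to well-documented policies that are not followed in daily work; a large negative gap points to capable teams operating without formal process.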

Maturity Baseline Assessment

Evaluate your organization's Cybersecurity and AI Risk maturity.


Maturity Levels

| Level | Process (Policies) | Practice (Execution) |
|---|---|---|
| Level 1: Initial | Undefined or non-existent processes; no formal policies. | Informal, undefined capabilities; ad hoc execution. |
| Level 2: Repeatable | Documented in some areas; outdated policies (>2 years old). | Consistent in pockets; not standardized org-wide. |
| Level 3: Defined | Policies and procedures are standardized, approved, and aligned with strategy (<10% exceptions). | Roles are assigned, trained, and standardized. |
| Level 4: Managed | Compliance is enforced; processes are monitored for effectiveness (<5% exceptions). | Capabilities are measured, evaluated, and predictable. |
| Level 5: Optimizing | Policies evolve with change; metrics are tracked (<1% exceptions). | Continuous improvement driven by internal and external insights. |
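The quantitative cues in the Process column (policy age and exception rate) can be read as cut-offs between levels. The Python sketch below assumes hypothetical boolean evidence flags and an exception rate expressed as a fraction of cases; treating policies older than two years as capping a domain at Level 2 is an interpretation of the table, not a documented scoring rule.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ProcessEvidence:
    """Hypothetical evidence for one domain's Process dimension."""
    has_formal_policies: bool
    standardized_and_approved: bool
    enforced_and_monitored: bool
    metrics_tracked: bool
    exception_rate: float    # share of cases handled outside policy, 0.0 to 1.0
    policy_age_years: float  # age of the oldest policy in scope (assumption)


def process_level(e: ProcessEvidence) -> int:
    """Return the highest Process level (1-5) whose table criteria are all met."""
    if not e.has_formal_policies:
        return 1  # Level 1: no formal policies
    if (not e.standardized_and_approved
            or e.policy_age_years > 2
            or e.exception_rate >= 0.10):
        return 2  # Level 3 needs approved standards and <10% exceptions
    if not e.enforced_and_monitored or e.exception_rate >= 0.05:
        return 3  # Level 4 needs enforcement, monitoring, and <5% exceptions
    if not e.metrics_tracked or e.exception_rate >= 0.01:
        return 4  # Level 5 needs tracked metrics and <1% exceptions
    return 5


example = ProcessEvidence(
    has_formal_policies=True,
    standardized_and_approved=True,
    enforced_and_monitored=True,
    metrics_tracked=False,
    exception_rate=0.03,
    policy_age_years=1.5,
)
print(process_level(example))  # → 4
```

Checking levels in ascending order means a domain is assigned the highest level whose criteria it fully meets, so a single unmet requirement caps the score. The Practice column has no numeric thresholds, so it is left out of this sketch.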

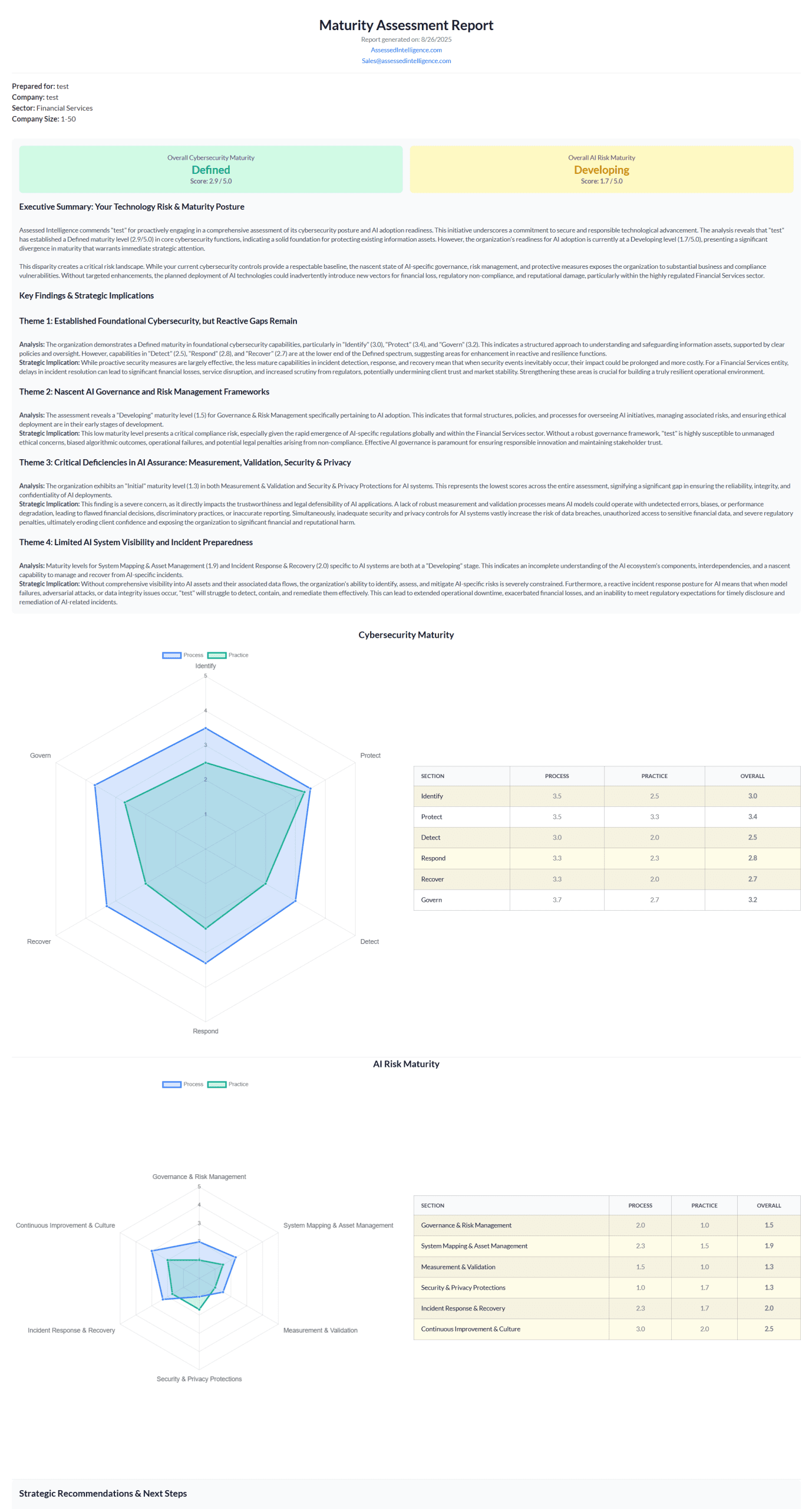

Cybersecurity Maturity

AI Risk Maturity

© 2025 All Rights Reserved.